My journey to EQUATOR: There are no degrees of randomness

16/02/2016

In the first of a new series celebrating 10 years of the EQUATOR Network, Doug Altman looks back and reflects on the key events and issues which led to its creation.

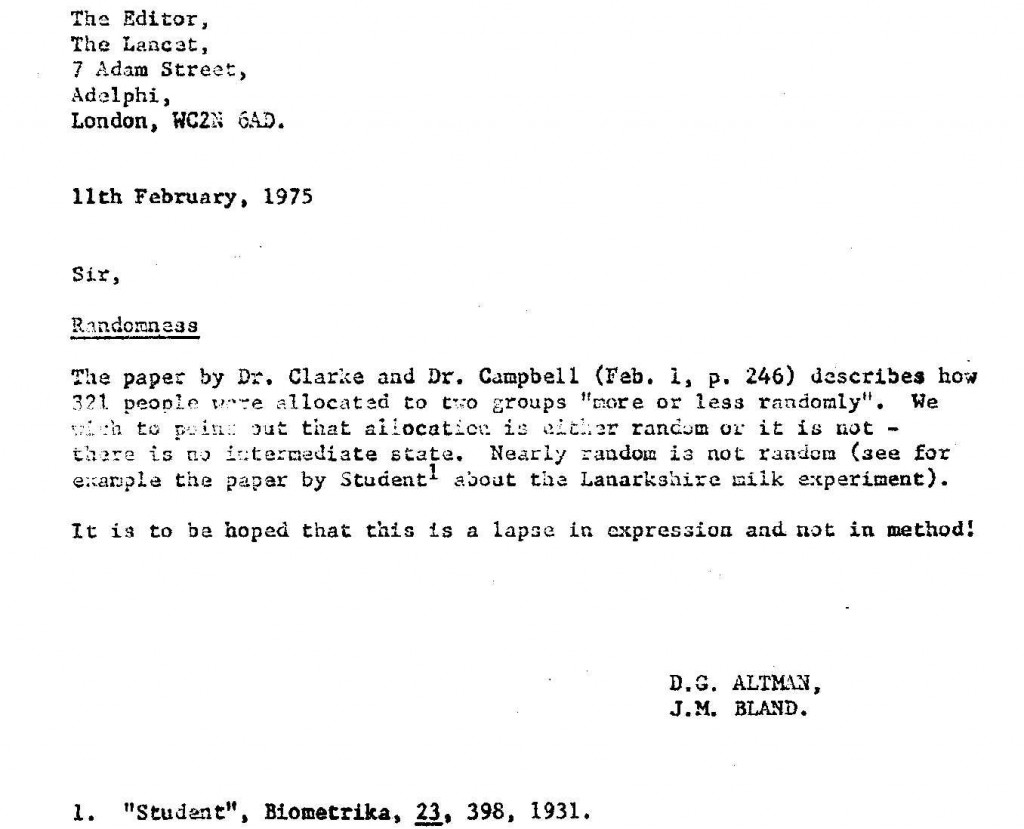

In my first job, at St Thomas’s Hospital Medical School way back in the 1970s, we would take our coffee breaks in the departmental library, so we could pore over the latest BMJ, Lancet, etc. while we drank. One report of a randomised trial included a statement that annoyed Martin Bland and me enough to provoke our first letter to a medical journal.

The sentence in question was this:

“Three hundred and twenty-one consecutive patients satisfying these inclusion criteria were more or less randomly allocated to groups treated with glibenclamide or chlorpropamide and divided into subgroups according to the type of diet.”

Of course, allocation is either random or it isn’t. “More or less” has no place here, or indeed anywhere when specifying research methods. Here is the complete 80-word letter we sent:

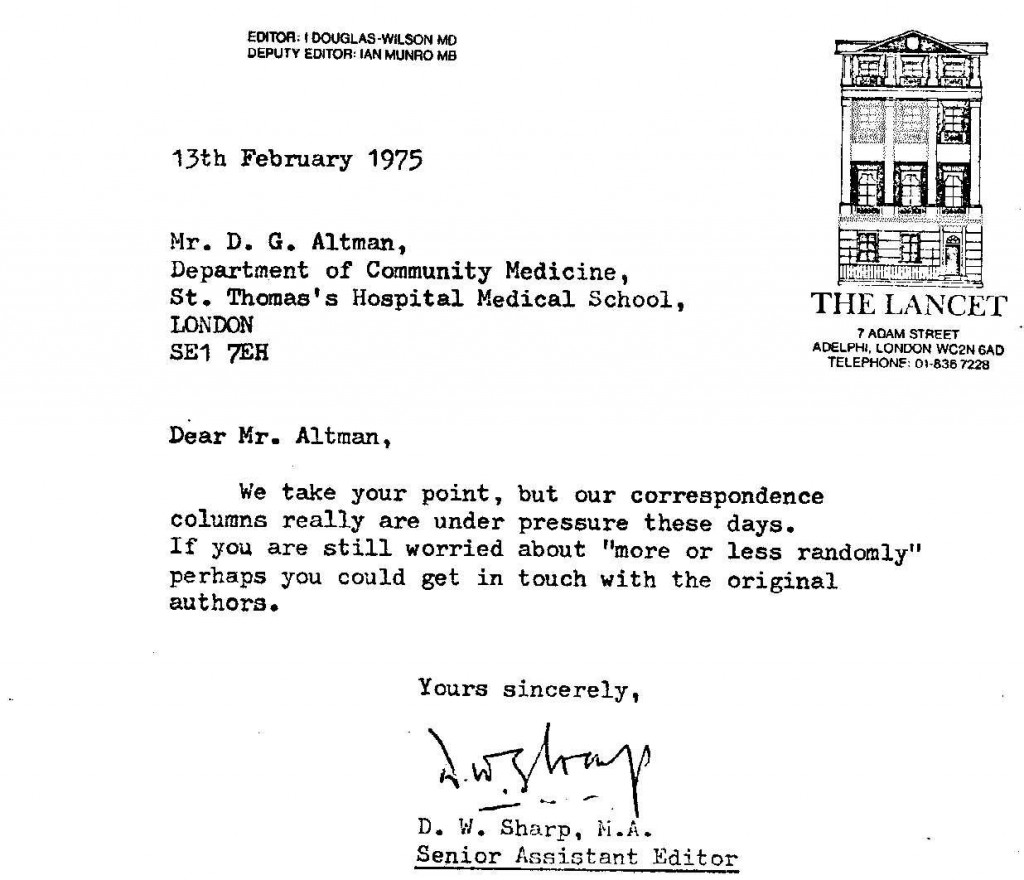

And the even shorter reply

We were disappointed by the reply, especially as our letter was so short. We appealed with a slightly longer letter, saying that “more or less randomly” was akin to saying a patient was “more or less dead”. That didn’t sway the journal. We also wrote to the authors, who didn’t reply.

At that time we didn’t recognise transparent reporting as crucially important – few people did.

Over the next few years I was more interested in methodological errors in journal articles and in guidance on doing statistics well, but I did make occasional references to the need for good reporting of research.

A very important step for me was a review of 80 reports of randomised controlled trials (RCTs) from four top general journals (Annals of Internal Medicine, BMJ, Lancet, and New England Journal of Medicine) that Caroline Doré and I published in the Lancet. Early in this article we said:

“Unless methodology is described the conclusions must be suspect.”

All was certainly not well in 1987 in these high-prestige journals. Among the problems we found were:

- A quarter of the 80 reports did not state the numbers initially allocated to each treatment.

- Reporting of the randomisation method was inadequate: in 30% of trials there was no clear evidence that the groups had been randomised.

- Only 26% of trials used an allocation system designed to reduce bias.

- For the 43 trials that did not report using block randomisation, the sample sizes in the two groups tended to be much too similar to be consistent with simple randomisation.
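Why are group sizes that are "too similar" a warning sign? Under simple 1:1 randomisation, chance alone makes the groups differ somewhat. The sketch below is not from the original review, and its function names are hypothetical. It assumes each patient independently has probability 1/2 of receiving either treatment, so the size of one group follows a Binomial(n, 1/2) distribution.

```python
from math import comb


def prob_exact_balance(n: int) -> float:
    """P(both groups have exactly n/2 patients) under simple 1:1 randomisation.

    The size of group A is Binomial(n, 1/2), so exact balance has
    probability C(n, n/2) / 2**n; it is impossible when n is odd.
    """
    if n % 2:
        return 0.0
    return comb(n, n // 2) / 2 ** n


def prob_difference_at_most(n: int, d: int) -> float:
    """P(|size of A - size of B| <= d) under simple 1:1 randomisation."""
    # If group A has k patients, group B has n - k, so the difference is |2k - n|.
    favourable = sum(comb(n, k) for k in range(n + 1) if abs(2 * k - n) <= d)
    return favourable / 2 ** n


if __name__ == "__main__":
    for n in (20, 50, 100, 200):
        print(f"n={n:3d}  exact balance: {prob_exact_balance(n):.3f}  "
              f"difference <= 2: {prob_difference_at_most(n, 2):.3f}")
```

For 100 patients, the chance of an exact 50/50 split is only about 0.08. A single balanced trial proves nothing, but a whole series of near-identical group sizes, with no mention of blocking or another restricted scheme, raises questions about how allocation was actually done. This is one way to frame the check; the original review may have analysed it differently.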

We suggested that a report of a randomised clinical trial should include the following statistical information:

i. a description of the trial design (including type of randomisation);

ii. evidence that the allocation was randomised (the method of generation of random numbers);

iii. how the allocation was done, including whether or not it was blinded;

iv. how the sample size was determined; and

v. baseline comparisons, and satisfactory handling of any differences.

We added that it was also important to report whether the patient, the person giving the treatment, and the assessor were blinded.
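Items i and ii ask authors to state the type of randomisation and how the random numbers were generated. The sketch below illustrates one common restricted scheme, permuted blocks, in a form where the seed, block size, and generator are explicit and could therefore be reported. The article itself does not propose this code. The function name, the default block size of 4, the default seed, and the use of Python's `random.Random` (a Mersenne Twister, not a cryptographic generator) are all assumptions made for the example.

```python
import random


def permuted_block_allocation(n_patients: int, block_size: int = 4,
                              seed: int = 2016) -> list[str]:
    """Return a 1:1 allocation list ("A"/"B") built from permuted blocks.

    Each block contains block_size // 2 of each treatment in random order,
    so at any point the two groups differ by at most block_size // 2.
    """
    if block_size <= 0 or block_size % 2:
        raise ValueError("block_size must be a positive even number")
    rng = random.Random(seed)  # seeded so the sequence can be reproduced and audited
    allocation: list[str] = []
    while len(allocation) < n_patients:
        block = ["A"] * (block_size // 2) + ["B"] * (block_size // 2)
        rng.shuffle(block)  # random order within the block
        allocation.extend(block)
    return allocation[:n_patients]  # the final block may be only partly used


if __name__ == "__main__":
    seq = permuted_block_allocation(10)
    print(seq, "A:", seq.count("A"), "B:", seq.count("B"))
```

One design caveat: with small fixed blocks, anyone who knows the earlier allocations can often predict the last one in each block. This is why item iii, how the allocation was done and whether it was concealed, matters as much as the sequence itself.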

We ended with the following suggestions:

“Authors should be provided with a list of items that are required. Existing checklists do not cover treatment allocation and baseline comparisons as comprehensively as we have suggested. Even if a checklist is given to authors there is no guarantee that all items will be dealt with. The same list can be used editorially, but this is time-consuming and inefficient. It would be better for authors to be required to complete a checklist that indicates for each item the page and paragraph where the information is supplied. This would encourage better reporting and aid editorial assessment, thus raising the quality of published clinical trials.”

Our paper perhaps contributed to the efforts to develop guidelines for reporting trials, which culminated in the CONSORT Statement in 1996. It may also be why Drummond Rennie asked me to peer review the CONSORT paper for JAMA. The following year I was invited to join the CONSORT Group. That in turn led to my involvement in the development of several other reporting guidelines.

As the years went by, it became clear that passive publication of guidelines was unlikely to change things nearly quickly enough, despite support from hundreds of medical journals. This was the rationale behind the creation of the EQUATOR Network in 2006. Initial funding came from the National Knowledge Service, and we thank Muir Gray for enabling us to get EQUATOR started. This year we celebrate 10 years of EQUATOR. Funding remains a major concern, however: our activities don’t sit neatly within the scope of existing funding programmes. We have suggested that all research funders should set aside a (very) small percentage of their budgets to support activities such as ours that aim to improve the quality and value of the medical research literature.

The last 10 years have seen a big increase in recognition of the importance of reporting research: we need complete, transparent and honest accounts of what was done and what was found in a study. Those sentiments embody the key ideas that all research on humans should be published and that the findings should be reported in their entirety.

Indeed, it is possible that attention has swung too far away from the fundamental issue of avoiding methodological errors, an issue raised very recently in Nature.

Doug Altman, 9 February 2016