It’s a kind of magic: how to improve adherence to reporting guidelines

17/02/2016

Diana Marshall (Senior Managing Editor) and Daniel Shanahan (Associate Publisher) of BioMed Central introduce a pilot experiment starting at four BMC-series journals that aims to help authors find the relevant reporting guideline for their study.

Science is facing a crisis. We seem to hear that every week, but that doesn’t make it any less true. In 2014, Chalmers et al. grabbed the headlines with their analysis suggesting that up to 85% of current research is avoidably wasted.

This has served to highlight the importance of transparency and reproducibility. Both require significant effort, but transparency is perhaps an area where journals can have more immediate impact. Promoting transparency and reuse in turn helps improve reproducibility by making it clearer how research has been performed.

In order to replicate the results of your work, a researcher needs to know exactly what you did – the smallest variation in the methods could lead to huge differences in the results. Similarly, before a reader can trust your conclusions, even if they seem supported by the data, they need to know that your approaches were methodologically sound.

Methods in the madness

Reporting guidelines exist to help with this. They specify a minimum set of items required for a clear and transparent account of what was done and found in a research study, in particular reflecting issues that might introduce bias into the research.

This is not new. The SORT guidelines, predecessor to the now widespread CONSORT Statement, were developed over 20 years ago, and a systematic review by Turner et al. suggests that journal endorsement of the CONSORT Statement is associated with improved reporting.

But where there were once only a few guidelines, the EQUATOR Network now hosts over 280 of them, covering a huge number of study types from preclinical animal studies to systematic reviews. It is impractical for a journal to explicitly ‘endorse’ them all, and for authors, finding the relevant guideline for a study can be like finding a needle in a haystack.

Finding the needle

This is why we’re delighted to announce that we have been working with the EQUATOR Network and innovative start-up Penelope Research to tackle this problem. Penelope have created a new tool to help authors find and use reporting guidelines.

Similar to a computer install wizard, this tool uses a simple decision tree to gather information about a study and guide authors to the relevant reporting guideline, linking directly to the checklist to follow.
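For readers curious how a decision tree of this kind might look in practice, here is a minimal sketch in Python. It is not the wizard's actual implementation: the questions, their order and the `find_guideline` function are our own assumptions for illustration, and only three well-known guidelines (PRISMA, CONSORT and STROBE) are included.

```python
from typing import Callable, Optional

# Hypothetical tree, not the wizard's real question set.
# A question node is (question, yes_branch, no_branch);
# a leaf is a guideline name, or None when no guideline applies.
TREE = (
    "Is the study a systematic review or meta-analysis?",
    "PRISMA",
    (
        "Were participants randomly allocated to interventions?",
        "CONSORT",
        (
            "Is it an observational study (cohort, case-control or cross-sectional)?",
            "STROBE",
            None,
        ),
    ),
)


def find_guideline(node, answer: Callable[[str], bool]) -> Optional[str]:
    """Walk the tree, asking one yes/no question per step, until a leaf is reached."""
    while isinstance(node, tuple):
        question, yes_branch, no_branch = node
        node = yes_branch if answer(question) else no_branch
    return node


if __name__ == "__main__":
    # Simulate an author who answers "yes" only to the randomisation question.
    print(find_guideline(TREE, lambda question: "randomly" in question))
```

In an interactive tool, the `answer` callable would put each question to the author; here it is a stand-in so the sketch can run on its own.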

We have already assessed the sensitivity and specificity of the wizard, using it to identify the relevant reporting guideline for every article submitted to BMC Nephrology during a one-week period. The results were then cross-checked by an expert, showing a sensitivity and a specificity of 100% each (albeit for a limited sample).
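As a rough illustration of how these two figures can be calculated, the sketch below compares the wizard's suggestion with an expert's judgement for each manuscript. The definitions are our assumption of one reasonable way to frame it: sensitivity is the share of manuscripts with an applicable guideline for which the wizard named that same guideline, and specificity is the share of manuscripts with no applicable guideline for which the wizard suggested none. The function name and the example data are hypothetical and are not the pilot data.

```python
from typing import Iterable, Optional, Tuple

Pair = Tuple[Optional[str], Optional[str]]  # (expert judgement, wizard suggestion)


def sensitivity_specificity(pairs: Iterable[Pair]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) under the assumed definitions above."""
    tp = fn = tn = fp = 0
    for expert, wizard in pairs:
        if expert is not None:
            # A guideline applies: only an exact match counts as a true positive.
            if wizard == expert:
                tp += 1
            else:
                fn += 1
        else:
            # No guideline applies: the wizard should suggest nothing.
            if wizard is None:
                tn += 1
            else:
                fp += 1
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


if __name__ == "__main__":
    # Made-up example: the wizard agrees with the expert on every manuscript.
    made_up = [("CONSORT", "CONSORT"), ("STROBE", "STROBE"), (None, None)]
    print(sensitivity_specificity(made_up))
```

With a small sample, a single disagreement moves either figure substantially, which is why the result above carries the caveat about sample size.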

A kind of magic?

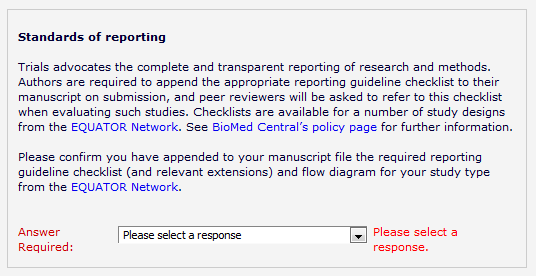

The next step is a structured before-after evaluation of the wizard. Four BMC-series journals – BMC Musculoskeletal Disorders, BMC Gastroenterology, BMC Nephrology and BMC Family Practice – will be updating their submission systems to require authors to follow the relevant reporting guideline for their study type (if any), prompting them to provide a populated checklist on submission.

We will monitor adherence to this policy for a month and then, in the following month, we will include a direct link to the wizard to help authors identify the relevant guidelines.

As well as the overall use of the wizard, for manuscripts submitted during this evaluation we will record whether the author(s):

- Stated they followed the reporting guidelines

- Submitted a populated checklist with their manuscript

- Followed the correct reporting guideline

We will then perform a before–after analysis on these data to explore the effect the wizard has.
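To give a sense of what such a comparison involves, here is a minimal sketch that computes, for each of the three recorded outcomes, the proportion of submissions meeting it in each month and the change between the two. The `Submission` record, the field names and the `before_after` function are hypothetical, and the sketch makes no assumption about which statistical test the evaluation will use.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Submission:
    # Hypothetical record of the three outcomes listed above.
    stated_guideline: bool
    submitted_checklist: bool
    correct_guideline: bool


OUTCOMES = ("stated_guideline", "submitted_checklist", "correct_guideline")


def proportions(submissions: Sequence[Submission]) -> Dict[str, float]:
    """Proportion of submissions meeting each outcome."""
    if not submissions:
        raise ValueError("need at least one submission")
    n = len(submissions)
    return {o: sum(getattr(s, o) for s in submissions) / n for o in OUTCOMES}


def before_after(before: Sequence[Submission],
                 after: Sequence[Submission]) -> Dict[str, float]:
    """Change in each proportion (after minus before)."""
    p_before, p_after = proportions(before), proportions(after)
    return {o: p_after[o] - p_before[o] for o in OUTCOMES}


if __name__ == "__main__":
    # Made-up data purely to show the calculation.
    month_1 = [Submission(True, False, False), Submission(False, False, False)]
    month_2 = [Submission(True, True, True), Submission(True, True, False)]
    print(before_after(month_1, month_2))
```

A positive value for an outcome would indicate that a larger share of submissions met it once the wizard link was in place, although a before-after design cannot by itself rule out other explanations for the change.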

We very much hope this will be a valuable tool for authors and researchers – and would love to hear your feedback regarding our ‘experiment’. If you have any questions or comments regarding the evaluation or the wizard, please contact daniel.shanahan@biomedcentral.com or diana.marshall@biomedcentral.com.

Diana and Daniel’s article originally appeared on the BioMed Central blog network on 12 February 2016.